2025-09-26

the captain

OpenAI announces Pulse, a cronjob personal assistant. This is a product I’ve been awaiting for a while, although I think I would like to have more specific direction over what the agent sends me than what Sama is describing. Anyway, while on the topic of personal agents, Seb Krier has a very interesting piece in Cosmos Institute on the potential for AI personal assistants to bring about a libertarian utopia, along the lines of what Robin Hanson advocates, with everything managed by private contracts and insurance markets. These scenarios are often parodied with the argument that the mental costs of negotiating and entering into such agreements mean such markets would be very illiquid and therefore inefficient. It’s an interesting counterargument that AI, making decisions tens of thousands of times faster than you and knowing you better than you know yourself, could handle this burden for you and effortlessly maximize your preferences across all of your interactions.

Ben Shindel on recent futarchy experiments on Manifold Markets.

Jack Thompson on preference utilitarianism, arguing against the counterexample of the Experience Machine on the grounds that preferences are path-dependent. I don’t know that this argument fully works, because it implies that the integral under the curve matters a lot, when in the grand scope of things that’s more or less a rounding error. It seems to me that the benefits to being a “type of person” are more about one’s expected value calculations towards future actions. There’s something I’ve been thinking about lately, which is that in a many-worlds quantum universe, a sufficiently strong adherence to particular principles would mean, theoretically, that in all similar universes you make the same decisions and remain the same type of person. How much utility do those, like Napoleon, who believe they are destined to succeed, derive from that belief?

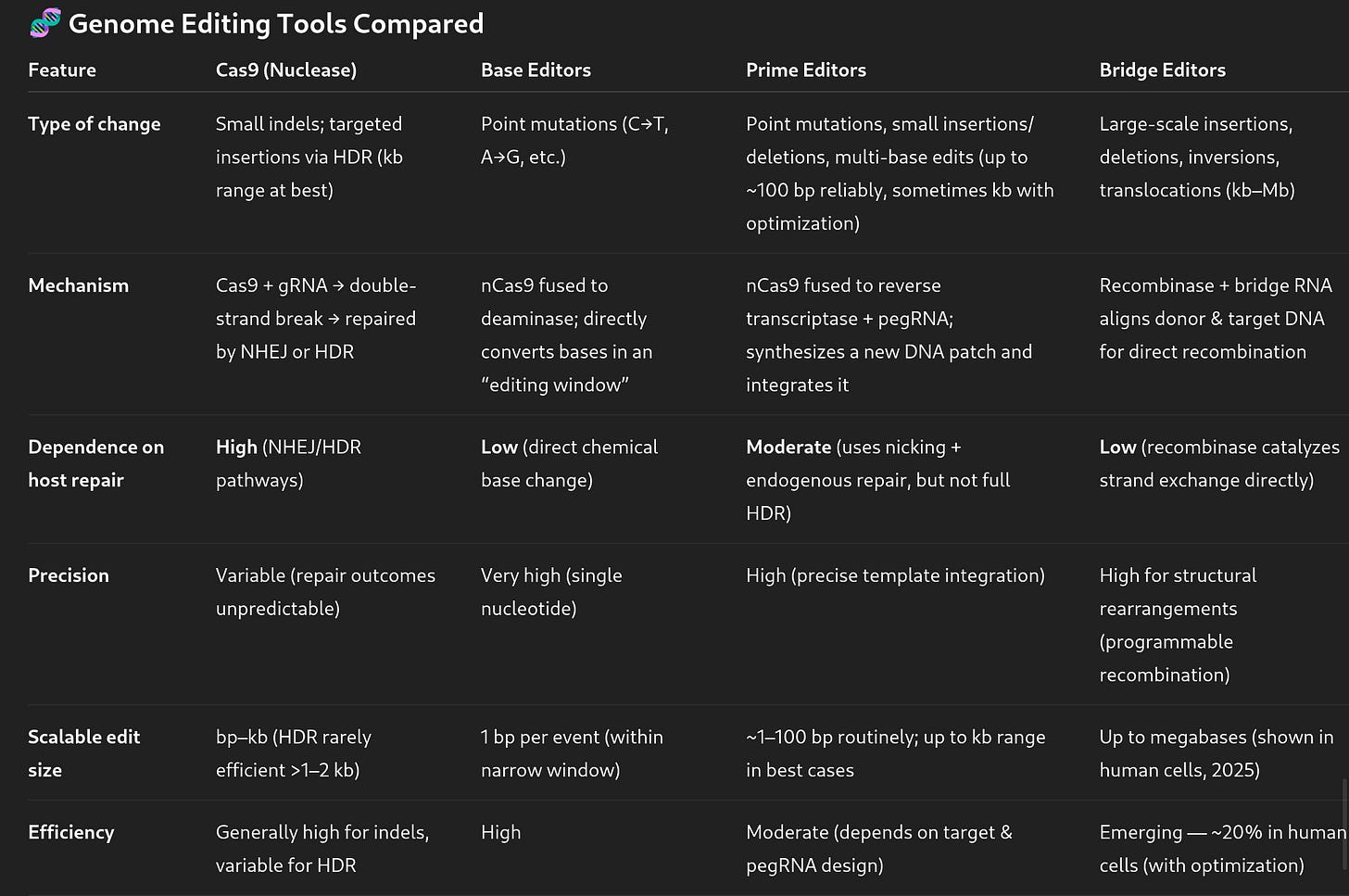

Arc Institute demonstrates bridge editing of the human genome up to 930 kb.¹ Niko McCarty also has a good explainer.

Alex Kesin on the legal fight around Amgen’s attempt to patent basically every PCSK9 inhibitor, and proposals to prevent similarly overbroad patent attempts in the future.

Adam on how risk-based regulation is just another type of regulatory uncertainty which results in overcompliance.

John Hawks on how to classify the skulls found in the Yunxian hominin site.

Edrith against geographical determinism.

Jeremy Neufeld in the Institute for Progress on why using the proposed “Wage Levels” for assigning H-1B visas would be a bad idea.

Luzia Bruckamp reviews the studies on the wage penalty from motherhood.

Rabbit Cavern with an ode to bubble gum.

From ChatGPT (unclear how accurate):

While on the topic of Arc Institute, Patrick Collison has a tweet on why their approach is so successful, Packy’s recent linkthread heavily features their work, and Christina Agapakis expresses enthusiasm for the research style made possible by Evo2.