2025-09-25

copenhagen

BBC reports on a gene therapy that slows Huntington’s Disease progression by 75%. Ruxandra translates this to investing advice, but I’m a little confused because this implies that biotech is somehow not subject to “sell the news” dynamics. If so, it’s not clear to me why this is: is biotech just an extremely inefficient market, or has something changed so that the success rate of biotech companies in hitting target milestones has recently gone up, without yet being internalized by market makers?

Wired article (Twitter thread) on Mindstate Design Labs, which is designing a collection of brain-state-altering chemicals.

Cremieux and The Harvard Crimson on the Baccarelli Tylenol review and testimony.

Deena Mousa in Works in Progress on why AI has not been able to replace radiologists.

Afra Wang translates a speech in Hangzhou from the Alibaba CEO on ASI, a break from the Chinese trend of not caring about AI metaphysics. I personally wouldn’t read too much into this, though, since marketers in China are often just as prone to hype and empty promises as their western counterparts. Maybe in some ways even more so, in that they will often directly rerun western copy without any sense of moderation or irony. What will be interesting to see is if they start going off in their own direction.

In the Huggingface daily papers, there’s a claim that for agentic workflows, a small number of high quality examples surpasses training with more data. This is interesting with regard to arguments that AI is fundamentally different from humans because it requires enormous amounts of training data, while humans can generalize from single examples. (Edit: My credence for this theory is low on priors, but it would be wild if there’s a sort of Moravec’s paradox related to things that we normally think of as “generalization”: if it’s learning how to move the fingers that’s hard, but using them in new and creative ways is actually easy. Anyway, there’s an IFP proposal by Andrew Trask for how to get more training data for AI.)

Brian Potter on accidental invention, noting it seems to occur particularly often in chemistry. There seems to be an implication as to which fields are particularly ripe for automated discovery: those where the experimental landscape is opaque but also featureful.

Stratechery interviews Booking.com CEO Glenn Fogel on aggregator-related topics like antitrust. In some ways, it feels like Ben’s aggregation thesis was a little too successful: he successfully described why a particular business strategy is good, but maybe left regulators an impression that anyone running it is way more dominant than they actually are.

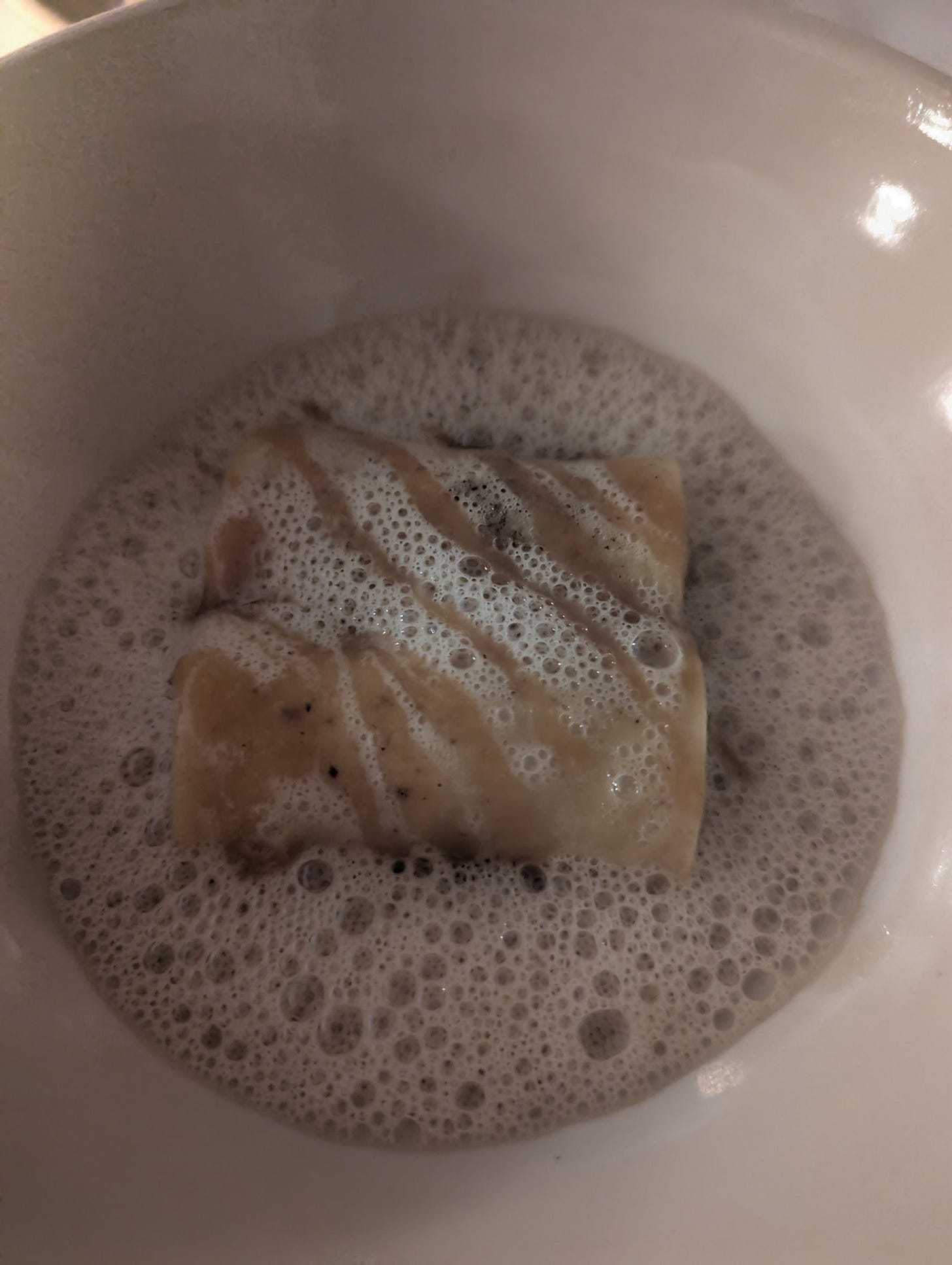

Tokyo Gourmet on the 2026 Tokyo Michelin Guide.

Tomas Pueyo suggests that warmer climates have lower relative productivity because the majority of the population tends to live in mountainous areas, which reduces the amount and influence of trade.

Kelsey Piper on how improvements in education in red states are underacknowledged.

Daniel Greco on the purpose of the literary canon, an extension of Naomi Kanakia’s take that the absolute quality of each entry is less important than the fact that consensus exists on its inclusion. On that note, the latest Conversations with Tyler is with Steven Pinker on common knowledge for coordination purposes.

Ross Barkan on Substack and free speech. This is also the subject of the latest Complex Systems podcast, which interviews Chris Best.

Sam Enright linkthread.

Dynomight fun facts.